SOURCE: QUARTZ

“There are two kinds of propaganda,” wrote Aldous Huxley in 1958 in Brave New World Revisited, a retrospective on his famous novel:

rational propaganda in favor of action that is consonant with the enlightened self-interest of those who make it and those to whom it is addressed…

(in other words, arguments couched in facts and logic)

…and non-rational propaganda that is not consonant with anybody’s enlightened self-interest, but is dictated by, and appeals to, passion.

This latter kind, Huxley went on,

avoids logical argument and seeks to influence its victims by the mere repetition of catchwords…

Make America Great Again!

…by the furious denunciation of foreign or domestic scapegoats…

Lock her up! Lock her up!

…and by cunningly associating the lowest passions with the highest ideals, so that atrocities come to be perpetrated in the name of God and the most cynical kind of Realpolitik is treated as a matter of religious principle and patriotic duty.

To Huxley’s readers, most of whom had lived through the era of Hitler, Mussolini, and Stalin, these methods would all have been familiar. But over time it came to seem, at least in the West, as if his “rational propaganda”—still possibly misleading, but nonetheless rooted in the language of reason and fact and enlightened self-interest—had won out as the primary form of political discourse.

And then 2016 happened. Voters chose Brexit and Donald Trump even though it was patently clear that Brexit hadn’t been thought through and that Trump wasn’t fit to be president. “Expert,” a term for someone who specializes in facts, became a pejorative. Politicians lied with seeming impunity, no matter how blatantly and how often the press caught them doing it.

So what went wrong?

The Naïve Pursuit of Reason

The standard answer is that many things had gone wrong. The global financial crisis. Jobs lost to globalization and automation. Widening inequality. Terrorist attacks. Refugees. And complacent, technocratic elites who spoke self-assuredly of growth and progress while failing to notice how many people saw little of either. That, goes the story, opened the way for unscrupulous populists to appeal to voters’ base emotions, making them ignore facts and logic that would normally be obvious to anyone.

But this narrative, while not untrue, contains a weakness: the assumption that the ability to appeal to voters’ base emotions is an anomaly, something that happens only at times of great national stress, and that under “normal” circumstances (whatever those are), facts and reason can and do prevail.

In reality, maybe what’s anomalous is for facts and reason to have the upper hand.

The belief, or rather hope, that humankind is ultimately rational has gripped Western politics at least since Descartes, and inspired such 19th-century optimists as Thomas Jefferson and John Stuart Mill. “Where the press is free, and every man able to read, all is safe,” Jefferson famously wrote.

But in recent years we’ve learned much about the human mind that contradicts the view of people as rationally self-interested decision-makers. Psychologists have established that we often form beliefs first and only then look for evidence to back them up. Research has turned up apparent physiological and psychological differences between liberals and conservatives, and has found evidence that these differences have ancient evolutionary origins. It has also identified the “backfire effect,” a close cousin of confirmation bias, in which people hew even more strongly to an existing belief when shown evidence that clearly contradicts it.

(What, one wonders, would being shown the internet have done to Jefferson’s faith in a free press—killed it, or made it stronger?)

Other research has looked at the habits of highly effective propagandists such as China, Russia, and alt-right icon Milo Yiannopoulos. The main takeaways: truth, rationality, consistency, and likability aren’t necessary for getting people to absorb your viewpoint. Things that do work: incessant repetition, distractions from the main issue, sidestepping counterarguments rather than refuting them, using “peripheral cues” to establish credibility or authority, and antagonizing people who dislike you in order to get the attention of people who might like you. Another favorite technique, this one perfected by the tobacco industry: strategically sowing doubt in something for which there’s overwhelming evidence.

Presumably, these methods play into our heuristics, the ancient cognitive shortcuts that humans, or our ancestors, evolved as survival instincts, probably long before we had language and reason.

They’re also a perfect summary of the Trump playbook.

The left—and the left-leaning mainstream media—failed to grasp this, argues George Lakoff, a Berkeley cognitive linguist. They kept trying to refute right-wing populists’ arguments, check their facts, and point out inconsistencies in their positions. That, he says, is why leftists were caught unawares by Brexit and Trump’s victory and still flounder in dealing with him.

Lakoff, who is best known for his work on how the metaphors we use influence our beliefs, argues that people tend to vote in line with their values, not their rational beliefs. And he has advanced an interesting claim: that rightists are consistently better than leftists at appealing to people’s values because of what, according to him, they tend to study at college:

If you’re a conservative going into politics, there’s a good chance you’ll study cognitive science, that is, how people really think and how to market things by advertising. So they know people think using frames and metaphors and narratives and images and emotions and so on… Now, if instead you are a progressive…you’ll study political science, law, public policy, economic theory and so on…

What you’ll learn in those courses is what is called Enlightenment reason, from 1650, from Descartes. And here’s what that reasoning says:… [I]f we think logically and we all have the same reasoning, if you just tell people the facts, they should reason to the same correct conclusion. And that just isn’t true. And that keeps not being true, and liberals keep making the same mistake year after year after year.

The 21st Century: Propaganda on Steroids

The dictators of the early 20th century knew all about repetition, distraction, antagonism, and so on, even if they didn’t know the science behind them. That’s why, as we saw at the start, Huxley’s description of non-rational propaganda so neatly matches Trump’s verbal tics. As the historian Timothy Snyder observes in his recent book On Tyranny, Trump’s methods for undermining truth are very similar to those identified by Victor Klemperer, a scholar and diarist who lived through Hitler’s Germany and then the Soviet-backed East German state that followed it.

What’s changed since then is, of course, the internet, and the many new ways it creates for falsehoods to reach us. The power of populism today lies in its ability to combine 20th-century propaganda techniques with 21st-century technology, putting propaganda on steroids.

Here are the main ways that happens:

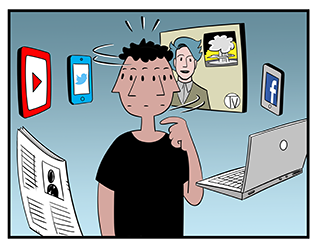

- Echo chambers. Social media and the explosion of choice in news sources have exacerbated our tendency to clump into like-minded groups. We see far more messages that reinforce our beliefs than challenge them. That’s because the platforms through which we find most of what we see online—social networks such as Facebook, Twitter, and Weibo and search engines such as Google, Yandex, and Baidu—have business models that require them to maximize the time we spend on them, which rewards showing us whatever keeps us engaged, and content that confirms what we already believe tends to do exactly that.

- Alternative news sources. Whether it’s Breitbart for the alt-right or RT for the Kremlin, it’s now possible to create large, well-financed operations that pump out news with a strong agenda and can reach people across the world. (Russian TV is particularly good at appealing to heuristic thinking, argues Maxim Alyukov, a Russian sociologist.) In fact, just one person can become an alternative news source: look at Trump’s Twitter account. This proliferation of sources doesn’t just overload people with competing versions of the truth. These sources can also change the news cycle, determining what gets attention and what doesn’t, forcing other media to chase stories they might otherwise ignore and to neglect those they should be paying attention to.

- Fake news. Even the most tendentious news sources generally stop short of outright falsehood, but some, such as the sites run by those notorious Macedonian teenagers who spread made-up pro-Trump stories during the US election campaign, deal in nothing else. Whereas sites like Breitbart and RT have a political agenda, these are essentially commercial parasites on politics, creating low-cost, sensational content to draw clicks and make money off ads. But by adding to the distraction and confusion, they further undermine the consensus on truth.

- Online swarms. If you have a fiercely loyal base of supporters (Trump, Yiannopoulos) or can pay for one (Russia, China), you can mobilize vast groups of people to troll opponents and flood the digital airwaves with your desired message, amplifying it and making it hard to tell how much genuine support it really has.

- Bots. Automated social media accounts are also being pressed into service both to amplify messages and to quash them. As the technology improves, they’ll become ever harder to distinguish from real people.

- Psychological profiling and targeted advertising. In its by-now infamous “emotional contagion” study of 2014, Facebook showed that it’s possible to shift people’s moods in measurable ways by changing how many posts containing positive or negative words appear in their News Feeds. In an only slightly less controversial study, the company showed that it could change people’s likelihood of voting. Companies such as Cambridge Analytica claim to be able to sway voters’ preferences en masse, using publicly available data skimmed from people’s social-media accounts to build detailed psychological profiles and craft tailored messages for each person. “Weaponized AI propaganda,”

We’ve launched a new Quartz obsession with propaganda to follow how the combination of these technologies with old-fashioned spin and demagoguery is changing politics and public discourse.

Huxley, it must be said, foresaw none of this. He devoted much of Brave New World Revisited to assessing which of the futuristic methods of persuasion in Brave New World, written 26 years earlier, might be on the way to coming true. He wrote chapters on “brainwashing,” “chemical persuasion,” “subconscious persuasion,” and even mass hypnosis—prophecies that now seem somewhat quaint.

Yet one almost-throwaway paragraph about the world of the 1950s rings stunningly true 60 years later:

In regard to propaganda the early advocates [like Jefferson] of universal literacy and a free press envisaged only two possibilities: the propaganda might be true, or it might be false. They did not foresee what in fact has happened, above all in our Western capitalist democracies—the development of a vast mass communications industry, concerned in the main neither with the true nor the false, but with the unreal, the more or less totally irrelevant. In a word, they failed to take into account man’s almost infinite appetite for distractions.

The central point of Brave New World was that—contrary to what George Orwell would suggest 17 years later with 1984—governments didn’t need to be totalitarian to exercise social control. Our “almost infinite appetite for distractions” could render a population politically helpless. Of all Huxley’s warnings, that was the most prescient.
